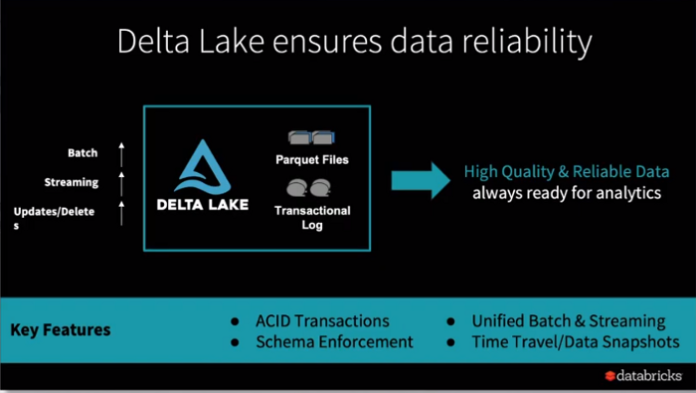

Delta Lake (DL) is an open-format storage layer for your data lake that brings reliability, security, and performance to both streaming and batch operations. By eliminating data silos and providing a single location for all data types, Delta Lake lays the groundwork for a low-cost, highly scalable lakehouse.

Top-notch, trustworthy information

Ensure your data teams always work with the most up-to-date information by providing a centralised hub where all your data, including real-time streams, can be stored and accessed with confidence. You can now run analytics and other data projects directly on your data lake, shortening project cycle times by as much as 50x.

Free and safe exchange of information

Delta Sharing is the industry's first open protocol for secure data sharing, making it easy to collaborate across organisations no matter where their data resides. Native integration with Unity Catalog provides streamlined administration and accountability for company-wide data sharing. This lets you reliably exchange data assets with suppliers and partners, improving business coordination while meeting security and regulatory requirements. Thanks to integrations with a wide range of platforms and tools, you can use the tool of your choice to view, query, enrich, and govern shared data.

Free-flowing and nimble

DL stores all data in the open Apache Parquet format, so it can be read by any reader that supports that format. Its APIs are open and fully compatible with Apache Spark. Running Delta Lake on Databricks lets users avoid the pitfalls of proprietary data formats while keeping access to a broad open-source ecosystem.

Results in the blink of an eye

Because it is powered by Apache Spark™, DL scales to very large workloads while maintaining high performance. It is also optimised with features such as indexing, and Delta Lake users have seen ETL workloads run up to 48x faster.
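Indexing-style optimisations work by letting the engine avoid reading data it does not need. One technique in this family is data skipping: if each data file records the minimum and maximum value of a column, a query with a range filter can skip every file whose range cannot match. The following is a toy, pure-Python sketch of that idea under those assumptions; `FileStats` and `prune_files` are hypothetical names, not part of Delta Lake's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    """Hypothetical per-file statistics: min/max of one numeric column."""
    path: str
    min_value: int
    max_value: int


def prune_files(files, low, high):
    """Keep only files whose [min, max] range overlaps the filter range [low, high]."""
    return [f for f in files if f.max_value >= low and f.min_value <= high]


files = [
    FileStats("part-0000.parquet", 1, 100),
    FileStats("part-0001.parquet", 101, 200),
    FileStats("part-0002.parquet", 201, 300),
]

# Filter equivalent to "WHERE id BETWEEN 150 AND 180": only one file must be read.
selected = prune_files(files, 150, 180)
print([f.path for f in selected])  # ['part-0001.parquet']
```

The saving grows with table size: the statistics are tiny compared with the files themselves, so checking them is far cheaper than scanning every file.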

Safe and reliable data automation

Delta Live Tables streamlines data engineering, making it easy to build and maintain pipelines that deliver fresh, high-quality data on DL. It supports the construction of a lakehouse by letting data engineering teams build pipelines declaratively, improve data reliability, and run production operations at cloud scale.
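To make the idea of a declarative pipeline concrete, here is a toy, pure-Python sketch. Each table is declared as a function with its upstream dependencies and an optional row-level quality expectation, and a small runner builds the tables in dependency order, dropping rows that fail their expectation. The `table` decorator, `PIPELINE` registry, and `run_pipeline` function are hypothetical and only mimic the declarative style; they are not the Delta Live Tables API.

```python
from graphlib import TopologicalSorter

# Hypothetical registry: table name -> (upstream dependencies, build function, expectation)
PIPELINE = {}


def table(name, depends_on=(), expect=None):
    """Declare a table; `expect` is an optional row-level data quality check."""
    def register(fn):
        PIPELINE[name] = (tuple(depends_on), fn, expect)
        return fn
    return register


@table("raw_orders")
def raw_orders(_inputs):
    return [{"id": 1, "amount": 30}, {"id": 2, "amount": -5}, {"id": 3, "amount": 12}]


@table("clean_orders", depends_on=["raw_orders"], expect=lambda row: row["amount"] >= 0)
def clean_orders(inputs):
    return list(inputs["raw_orders"])


@table("order_total", depends_on=["clean_orders"])
def order_total(inputs):
    return [{"total": sum(r["amount"] for r in inputs["clean_orders"])}]


def run_pipeline():
    """Build every declared table in dependency order, enforcing expectations."""
    graph = {name: set(deps) for name, (deps, _, _) in PIPELINE.items()}
    results = {}
    for name in TopologicalSorter(graph).static_order():
        deps, fn, expect = PIPELINE[name]
        rows = fn({d: results[d] for d in deps})
        if expect is not None:
            rows = [r for r in rows if expect(r)]  # drop rows that fail the check
        results[name] = rows
    return results


results = run_pipeline()
print(results["order_total"])  # [{'total': 42}]
```

Because the pipeline is declared rather than scripted, the runner decides the execution order; adding a table only requires declaring what it depends on.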

Scalable security and administration

DL reduces risk by enabling fine-grained control over data access, which is typically not possible in data lakes and is essential for proper governance. You can also update data in your data lake quickly to meet the requirements of legislation such as GDPR, while audit logging strengthens data governance. Databricks’ Unity Catalog, the first multi-cloud data catalogue for the lakehouse, natively integrates with and builds on these capabilities.
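As a toy illustration of fine-grained access control paired with audit logging, the sketch below checks a privilege for each principal and table, and records every decision, whether allowed or denied. The `GRANTS` mapping, `check_access` function, principals, and privilege names are all hypothetical; in practice these controls are configured in the platform (for example, through Unity Catalog) rather than written in application code.

```python
from datetime import datetime, timezone

# Hypothetical grants: (principal, table) -> set of privileges
GRANTS = {
    ("analyst", "sales.orders"): {"SELECT"},
    ("engineer", "sales.orders"): {"SELECT", "MODIFY"},
}
AUDIT_LOG = []


def check_access(principal, table, privilege):
    """Return whether `principal` holds `privilege` on `table`, auditing every decision."""
    allowed = privilege in GRANTS.get((principal, table), set())
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "principal": principal,
        "table": table,
        "privilege": privilege,
        "allowed": allowed,
    })
    return allowed


print(check_access("analyst", "sales.orders", "SELECT"))  # True
print(check_access("analyst", "sales.orders", "MODIFY"))  # False
print(len(AUDIT_LOG))  # 2
```

Recording denied requests as well as allowed ones is what makes the log useful for audits: it shows who tried to do what, not only what succeeded.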

Use Cases

Analysing your data using BI

By running BI workloads directly on your data lake, you give data analysts rapid access to all new, real-time data so they can draw actionable insights about your business. DL lets you run a multi-cloud lakehouse architecture that delivers data warehousing performance at data lake economics, with up to 6x better price/performance for SQL workloads than conventional cloud data warehouses.

Blend batch processing with real-time input

Use a single, streamlined architecture to handle batch and streaming workloads without the hassle of redundant systems or complicated workflows. A DL table can serve as a batch table, a streaming source, or a streaming sink, and its native integration with Spark Structured Streaming covers streaming data ingestion, batch backfill of historical data, and interactive queries.
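The idea that one table can act as a batch table, a streaming source, and a sink can be sketched without Spark. In the toy model below, each write is a numbered commit; a batch read returns the whole snapshot, while a streaming reader remembers the last commit it processed (its offset) and reads only newer commits. `AppendOnlyTable` is a hypothetical class; with Spark, the corresponding operations on Delta tables would be `spark.read`, `spark.readStream`, and `writeStream`.

```python
class AppendOnlyTable:
    """Toy table: every write is one commit whose index is its version number."""

    def __init__(self):
        self._commits = []  # list of row batches; position == version

    def append(self, rows):
        """Sink: append rows as a single commit and return the new version."""
        self._commits.append(list(rows))
        return len(self._commits) - 1

    def read_batch(self):
        """Batch read: every row in the current snapshot."""
        return [row for batch in self._commits for row in batch]

    def read_stream(self, offset):
        """Streaming read: rows from commits at or after `offset`, plus the next offset."""
        new_rows = [row for batch in self._commits[offset:] for row in batch]
        return new_rows, len(self._commits)


raw = AppendOnlyTable()
clean = AppendOnlyTable()

raw.append([{"user": "a", "ok": True}, {"user": "b", "ok": False}])  # historical backfill
offset = 0
new_rows, offset = raw.read_stream(offset)      # raw acts as a streaming source
clean.append(r for r in new_rows if r["ok"])    # clean acts as a streaming sink

raw.append([{"user": "c", "ok": True}])          # new real-time data arrives
new_rows, offset = raw.read_stream(offset)      # only the new commit is read
clean.append(r for r in new_rows if r["ok"])

print(clean.read_batch())  # batch query over the sink table
```

Tracking an offset rather than re-reading everything is what lets the same table serve both modes: batch readers see the full snapshot, and streaming readers see only what changed.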

Ensure compliance with rules and regulations

Delta Lake solves common problems such as corrupted data ingestion, deleting data for regulatory compliance, and modifying data during change data capture. DL's ACID transaction support guarantees that every operation on your data lake either completes successfully or is aborted so it can be retried later, without the need to build custom data pipelines. In addition, DL records every change made to your data lake in its transaction log, making it easy to retrieve and reuse earlier versions of your data so you can reliably comply with regulations such as GDPR and CCPA.
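The ACID and versioning guarantees described above rest on a transaction log. The sketch below is a simplified, pure-Python model loosely inspired by Delta Lake's `_delta_log` directory of numbered JSON commits. Each commit lists files to add or remove. A commit becomes visible all at once or not at all, and two writers cannot both claim the same version. Replaying the log up to any version reconstructs that older snapshot (time travel). `ToyTransactionLog` is hypothetical; the real Delta protocol has more action types, checkpoints, and conflict-resolution rules.

```python
import json
import os
import tempfile


class ToyTransactionLog:
    """Toy versioned log: each commit is a JSON file named by its zero-padded version."""

    def __init__(self, root):
        self.log_dir = os.path.join(root, "_log")
        os.makedirs(self.log_dir, exist_ok=True)

    def _path(self, version):
        return os.path.join(self.log_dir, f"{version:020d}.json")

    def latest_version(self):
        """Highest committed version, or -1 if nothing has been committed."""
        versions = [int(n[:-5]) for n in os.listdir(self.log_dir) if n.endswith(".json")]
        return max(versions, default=-1)

    def commit(self, actions, read_version):
        """Publish version `read_version + 1` all at once, or fail if it is already taken."""
        version = read_version + 1
        fd, tmp_path = tempfile.mkstemp(dir=self.log_dir, suffix=".tmp")
        with os.fdopen(fd, "w") as f:
            json.dump(actions, f)
        try:
            # os.link fails if the target exists, so only one writer wins each version,
            # and readers never observe a half-written commit file.
            os.link(tmp_path, self._path(version))
        except FileExistsError:
            raise RuntimeError(f"conflict: version {version} exists; re-read and retry") from None
        finally:
            os.remove(tmp_path)
        return version

    def snapshot(self, version=None):
        """Replay add/remove actions up to `version` (default: latest) to get live files."""
        if version is None:
            version = self.latest_version()
        files = set()
        for v in range(version + 1):
            with open(self._path(v)) as f:
                for action in json.load(f):
                    if action["op"] == "add":
                        files.add(action["path"])
                    elif action["op"] == "remove":
                        files.discard(action["path"])
        return files


with tempfile.TemporaryDirectory() as root:
    log = ToyTransactionLog(root)
    log.commit([{"op": "add", "path": "part-0.parquet"}], read_version=-1)  # version 0
    log.commit([{"op": "add", "path": "part-1.parquet"}], read_version=0)   # version 1
    # Replace part-0 with a rewritten file as one atomic remove + add commit.
    log.commit([{"op": "remove", "path": "part-0.parquet"},
                {"op": "add", "path": "part-2.parquet"}], read_version=1)   # version 2
    try:
        # A writer that last read version 0 tries to claim version 1 again.
        log.commit([{"op": "add", "path": "part-3.parquet"}], read_version=0)
        conflict_detected = False
    except RuntimeError:
        conflict_detected = True
    final_version = log.latest_version()
    latest_files = sorted(log.snapshot())
    version0_files = sorted(log.snapshot(version=0))  # time travel to version 0

print(latest_files)    # ['part-1.parquet', 'part-2.parquet']
print(version0_files)  # ['part-0.parquet']
```

The failed writer leaves no trace in the log, which mirrors the "complete or abort for retry" behaviour described above: it can re-read the latest version and try again.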